Thinking Outside the Bot conference sheds light on the growth of AI -- with amazing demos

More than 100 higher education professionals from HFC and the University of Michigan-Dearborn, along with speakers from UM-Ann Arbor and UM-Flint, attended the “Thinking Outside the Bot: Optimizing A.I. Good While Minimizing the Bad” symposium in mid-September, learning how generative artificial intelligence will affect higher education at an accelerating pace.

“Remember, there was a time when many people thought something called the World Wide Web would destroy higher education as we knew it – but it didn’t. We adapted. Now, can we even imagine working, teaching, and learning without the tools and resources the internet provides for us in higher education? No,” said HFC Vice President of Academic Affairs Dr. Michael Nealon. “It is said that change is the only constant. Everything changes, and that can seem rather scary. Human intelligence was there to create A.I. Likewise, I believe the human intelligence exists to manage A.I., so we can learn to live well with it and to harness it for the greater good.”

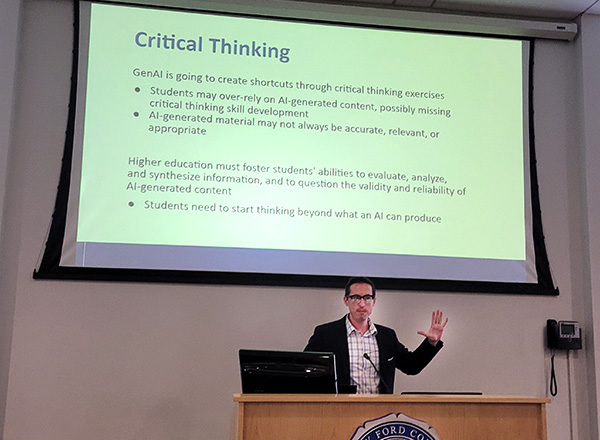

“A.I. cannot be ignored in higher education. We humans are lucky to have been alive for multiple revolutions in computer technology,” said University of Michigan-Flint Director of Online and Digital Education Nick Gaspar, one of the keynote speakers. “We have never seen anything advance so quickly or so personally until A.I. Whatever you're doing in higher education, it is likely that it can be augmented or supported by A.I.”

How creative is A.I.?

The symposium featured fascinating creative highlights:

- A.I.-generated music and a presentation about musical compositions, by HFC music instructor Anthony Lai.

- A performance of an A.I.-generated play about a student who is caught cheating with A.I. and has a conversation about academic honesty with her professor. HFC theatre instructor John Michael Sefel played the professor, and theatre students played the other roles.

In these cases, the A.I.-generated creative content was not outstanding. But it is improving daily, and will likely have an impact on creative professions, performances, expression, and intellectual property rights in the future.

One of the keynote speakers also highlighted the creative generative capacities of the medium by displaying A.I.-generated "photographs" of herself. They were reasonably convincing as actual images.

A.I. has the potential to accelerate learning, curriculum development, and research

Gaspar and fellow keynote speaker Dr. Julie Hui, assistant professor of information at the University of Michigan in Ann Arbor, reiterated that A.I. is here to stay. There are many emerging opportunities to work with it productively -- and to impact higher education.

If used correctly, A.I. is a tool that has the potential to accelerate learning for students, curriculum development for faculty, and research for the institution. If used inappropriately or without robust education and knowledge, it could lead to less learning and poorer outcomes.

“Faculty needs to build a relationship with A.I.,” said Gaspar. “It is up to you to make your own value judgments about how you want to interact with this technology.”

Some trepidation about the technology is understandable. Generative A.I. has the capacity to create realistic, original content that can enable plagiarism, cheating, and misinformation. It sometimes simply makes things up -- an outcome known as "A.I. hallucinations." Hui shared a ChatGPT scenario that claimed historical figures James Joyce and Vladimir Lenin first met in 1916. The ChatGPT-generated meeting sounded authentic, but it is false: There is no historical record that Joyce and Lenin ever met, anywhere.

Determining whether text was generated by A.I. is harder than it sounds.

“A.I. detection is an unwinnable war. The A.I.s learn from each other and adapt. Generative A.I. has a ‘yes’ bias when asked if it wrote something,” explained Hui. “If students use A.I. to cheat, it’s likely because they don’t find the work meaningful or are unengaged, which leads them down the road that ends in academic dishonesty.”

Learning to work with A.I. rather than against it

Gaspar said faculty should start by assessing the learning process: feeding their assignments to generative A.I. to see what develops.

- If what A.I. creates is bad, then the integrity of the assignments as a means of achieving learning is preserved.

- If A.I. develops content that earns a passing grade, the assignments should probably be revised.

“This is the end of the five-paragraph essay,” he said.

Hui suggested that instructors focus on helping students make sense of the complexities of writing:

- What are different ways to organize an essay?

- What are the key components of clear writing?

She developed a tool called Lettersmith to help students make sense of writing, which she believes is more important than ever now that A.I. tools can generate prose on the fly. This tool is publicly available for students and instructors.

Hui made short-term recommendations to preserve academic integrity:

- Clear policies and expectations

- Option: Honor Pledge

- Promote ethical and responsible A.I. use

- Change the weight of grades (for instance, consider alternative forms of assessment, such as asking students to draw from personal experience or topics mentioned during class, so A.I. cannot predict the material)

- Talk with students if fraud is suspected

“Faculty need to go beyond recall and superficial understanding,” said Hui. “Making something ‘A.I.-proof’ is an impossible standard. Faculty need to adapt rubrics to assess creativity, design, performance, and multimedia elements.”

A.I. can be a support tool that benefits students

A.I. can benefit students if used:

- As a study aid

- As an on-demand copy editor, proofreader, or code reviewer

- To generate sample quiz questions

- For rapid prototyping and storyboarding

- To prepare for how it will be used in the workplace

HFC psychology instructor Alison Buchanan and HFC English instructor Scott Still – two of the symposium’s organizers – shared their insights about the event. Buchanan called it a success.

“Our keynote speakers shared a wealth of information about A.I. They illustrated the power of A.I. within the educational setting while also reminding us of diversity, equity, and inclusion issues related to A.I. They provided great examples of how A.I. can be used to enhance student success, as well as strategies for keeping us as A.I.-informed as possible. The symposium was a wonderful event that allowed us to have frank and important discussions about the impact of A.I. on our lives,” said Buchanan.

“A.I. in higher education is here to stay. That’s reality,” said Still. “Replacing teachers with A.I. – that will never happen. A.I. can be used as a support tool, but it will not replace human beings. We need to have an active voice outside our own campus and find out the emerging best practices of using A.I., so we can be informed.”